The Roland-Garros tournament allows the innovation department and the editorial teams of the sports department to test the evolution of these new formats and evaluate their potential within the future offerings of France Télévisions.

RGLAB is also an opportunity to welcome numerous professionals from the broadcasting and digital sectors, as well as technology partners, the advertising agency of France Télévisions, and the directors of the FFT. These exchanges focus on changing usage habits and the future of television, with one guiding question: how can the experience be improved and the offer renewed for a young audience that traditionally turns away from linear television?

Several functional prototypes were presented at this RGLAB, allowing attendees to test different use cases on the latest mixed reality headsets and 3D screens, combining advances in artificial intelligence, spatial computing, and 3D video. These experiences complement the work done in previous editions on immersive spaces and explore new ways to utilize and interact with tournament content.

Vision Pro: An Immersive Revolution at RGLAB 2024

For the 2024 edition of RGLAB, the Innovation Department of France Télévisions presents its first demonstrator on Apple’s Vision Pro headset. This innovative application showcases the capabilities of the headset and the variety of content and experiences it can offer, especially in mixed reality, virtual reality, and spatial computing.

Among the key features of this application is the multi-court feature. This allows users to choose, via a 3D map of the Roland Garros stadium, the court they wish to watch live on high-resolution virtual screens integrated into the real environment. Additionally, two versions of 3D match replays are offered: one with animated characters and simplified gestures based on player positions and ball trajectories, and another with full motion capture providing greater precision of player movements. Users can also experience this in real size, immersed in a 3D replica of the Philippe Chatrier court. The application also integrates video converted to spatial video format on Leia Inc.'s Immersity AI platform, allowing matches to be viewed in stereoscopy.

These experiences fully leverage the capabilities of the Vision Pro, including interface manipulation via eye and hand tracking and exceptional video rendering quality, enabling an immersive mixed reality experience from the RGLAB terrace.

However, it is important to note that the Vision Pro headset also has some notable drawbacks. Its high price, relatively significant weight, and less-than-optimal comfort can pose challenges for some users. Despite these limitations, this demonstrator paves the way for the future of spectator experience, where connected headsets and glasses will allow users to personalize their experience and environment with interactive 3D screens and elements. With this technology, the Vision Pro offers a fascinating glimpse into the future modes of consuming sports content, making the viewing experience more customizable, interactive, and immersive than ever.

The Tournament on Quest 3

With the introduction of Meta’s Quest 3 headset, a new feature has emerged that will revolutionize how we perceive and use virtual reality headsets for the general public. The Quest 3, thanks to two color cameras added to the front, offers the “Passthrough” function. This transforms the VR headset into a mixed reality headset, eliminating one of the main drawbacks often associated with VR headsets: isolation. The strategically placed color cameras allow the outside world to be seen directly inside the headset, providing a continuous view of the real environment even with the headset on. The major advantage of this technology is that it allows virtual elements to be superimposed on reality, giving the impression that these virtual objects are an integral part of the real world. Thus, mixed reality, a blend of reality and virtual reality, becomes accessible to everyone.

Now that mixed reality is available to everyone, our team has explored this potential to propose concrete experiences at RGLAB that foreshadow the type of applications and services France Télévisions could offer in mixed reality, in the near or distant future. Two applications were developed, one with a service-oriented approach and the other more playful, featuring different types of content.

Multi-Courts: An application that represents a service offering that France Télévisions could consider in the near future. Specifically designed for Roland-Garros, it allows users to follow all matches live through virtual floating screens that can be moved and enlarged at will, to be positioned wherever desired. The choice of stream is made by interacting with a 3D map of Roland-Garros, also floating and movable at will, which opens the stream corresponding to the selected court. Up to three screens can be opened simultaneously, allowing users to follow three matches at the same time while benefiting from the sound of only the screen being watched. This concrete application could quickly be put into production to offer enriching experiences to our viewers.
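The screen and sound rules described above (at most three screens, audio only from the screen being watched) can be sketched as follows. This is a minimal illustration, not the application's code: the class name, method names, and court identifiers are hypothetical, and how the headset decides which screen is being watched is left out.

```python
class MultiCourtView:
    """Hypothetical model of the Multi-Courts screen and audio rules."""

    MAX_SCREENS = 3  # limit stated in the article

    def __init__(self):
        self.open_courts = []  # courts currently displayed, in opening order
        self.watched = None    # court whose screen the user is looking at

    def open(self, court):
        """Open a screen for a court; refuse once three are already open."""
        if court in self.open_courts:
            return True
        if len(self.open_courts) >= self.MAX_SCREENS:
            return False
        self.open_courts.append(court)
        return True

    def watch(self, court):
        """Mark an open screen as the one being watched."""
        if court in self.open_courts:
            self.watched = court

    def volumes(self):
        """Only the watched screen is audible; the others are muted."""
        return {c: (1.0 if c == self.watched else 0.0) for c in self.open_courts}
```

Selecting a court on the 3D map would correspond to `open(court)`, and a change of focus to `watch(court)`.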

The Tournament: A prototype that pushes the exploration of mixed reality further, highlighting the physical interactions possible with virtual objects integrated into our environment. We have enabled the manipulation of virtual objects such as tennis balls or miniature trophies, demonstrating physics management and natural interactions. Several activities are offered. Users can watch a moving tennis player captured in volumetric video, rendered at life size with a strikingly realistic result. They can also view a real rally from a Roland-Garros match, reproduced in miniature from motion capture. Finally, various “easter eggs” are hidden in the experience, such as virtually breaking the surrounding walls to reveal a 3D model of the Philippe Chatrier court, giving the impression that the living room sits in the middle of the court.

These prototypes highlight the potential of mixed reality and show that as it becomes more widely deployed among users, this technology will undoubtedly become essential. By blending the virtual with our daily lives, mixed reality erases the boundary between the real and the virtual.

2D to 3D Video Conversion

France Télévisions has partnered with the American technology company Leia Inc., which it met at the last CES in Las Vegas. The technology developed by Leia Inc. makes 3D accessible to everyone without the need for glasses, thanks to a combination of hardware innovations such as 2D-3D switchable lenses and eye-tracking, as well as software advancements.

Leia Inc. has developed a series of professional plugins for Unity and Unreal, along with a versatile SDK that includes a Media SDK for seamless 2D to 3D conversion and various XR tools and 3D applications, such as the 4K-8K 3D video player, 3D model visualization, and 3D video chat.

Additionally, LeiaSR™ displays, ranging from 6 to 32 inches, support both LCD and OLED technologies, ensuring a compromise-free visual experience in both 2D and 3D without any loss of brightness or ghosting. The demonstration presented at RGLAB allowed viewers to relive a match sequence captured in 8K/2D and displayed in 3D on a 32-inch LeiaSR™ screen, offering exceptional viewing quality and total immersion on the Philippe Chatrier court.

Through its partnership with Leia Inc., France Télévisions is also exploring the conversion of 2D video content to 3D via the Immersity AI web platform. This allows matches to be viewed in 3D almost instantly in various formats (HD, 4K, 8K, or even spatial video format) on glasses-free 3D screens, integrating Leia Inc.'s AI technology. The Immersity technology uses AI to detect and enhance different shots in a video, utilizing this information to improve the depth effect.

This 3D screen technology, soon to be accessible to the general public on both PCs and Android smartphones, will facilitate access to an immersive 3D experience, especially in sports, gaming, and cinema. A new era of immersive entertainment without headsets is within reach. The experience is still in the making!

Virtual Production and Gaussian Splatting

The 3D Gaussian Splatting technology, recently highlighted by INRIA’s work, allows capturing the real world, whether an object or a place, from videos or photos taken from many different angles. This technology then transforms these images into a photorealistic 3D model that can be integrated into 3D rendering engines such as Unreal Engine or Unity.

Unlike traditional 3D objects made up of polygons, the 3D model created by Gaussian Splatting consists of a point cloud. Each point is rendered as a small “splat” of diffuse color, more or less extended, and the superimposition of these “splats” creates the image of the captured object or place from any viewing angle. To achieve this result, the point cloud is trained by comparing its renderings with the real images from the shoot and adjusting the splats until a satisfactory result is obtained. Each “splat” is governed by a Gaussian function that defines its rendering (color, transparency, size, shape) based on the viewpoint. This feature captures how light behaves on different surfaces, whether reflective (like metal or a mirror) or transparent and refractive.
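The superimposition of splats can be illustrated for a single pixel. The sketch below is deliberately simplified and is not the renderer used at RGLAB: it assumes splats already projected to 2D, axis-aligned Gaussians, and a constant color per splat, whereas real 3D Gaussian Splatting uses anisotropic 3D Gaussians and view-dependent color. All function and field names are hypothetical.

```python
import math

def splat_alpha(px, py, cx, cy, sigma_x, sigma_y, opacity):
    """Opacity contributed at pixel (px, py) by one 2D Gaussian splat."""
    dx, dy = px - cx, py - cy
    falloff = math.exp(-0.5 * ((dx / sigma_x) ** 2 + (dy / sigma_y) ** 2))
    return opacity * falloff

def composite_pixel(px, py, splats):
    """Blend splats front to back (nearest first) at one pixel.

    Each splat is a dict with keys: depth, cx, cy, sx, sy, opacity, rgb.
    Returns the accumulated color and the remaining transmittance.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through
    for s in sorted(splats, key=lambda s: s["depth"]):
        a = splat_alpha(px, py, s["cx"], s["cy"], s["sx"], s["sy"], s["opacity"])
        for i in range(3):
            color[i] += transmittance * a * s["rgb"][i]
        transmittance *= 1.0 - a
    return color, transmittance
```

Training, in this picture, amounts to adjusting each splat's position, size, opacity, and color so that the composited pixels match the photographs.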

For this edition of RGLAB, we showcased this technology by creating a virtual production set using “Gaussian Splatting.” We took photos of the Philippe Chatrier court and, thanks to this technology and the expertise of CEA-List, recreated a 3D model of the court that we integrated as a virtual set. At RGLAB, we installed a green screen in front of which visitors could be filmed, giving the impression of being on the clay court of Philippe Chatrier, thanks to the striking realism offered by Gaussian Splatting. For even greater immersion at the heart of Roland-Garros, we offered the possibility to lift the Coupe des Mousquetaires or the Coupe Suzanne-Lenglen (models made in conventional 3D), using a tracker from the Vive Mars Camtrack system.

This technology can be used in many other situations, such as reproducing objects for augmented reality or creating virtual tours of iconic places with a result close to reality.

AI Conversational Agent

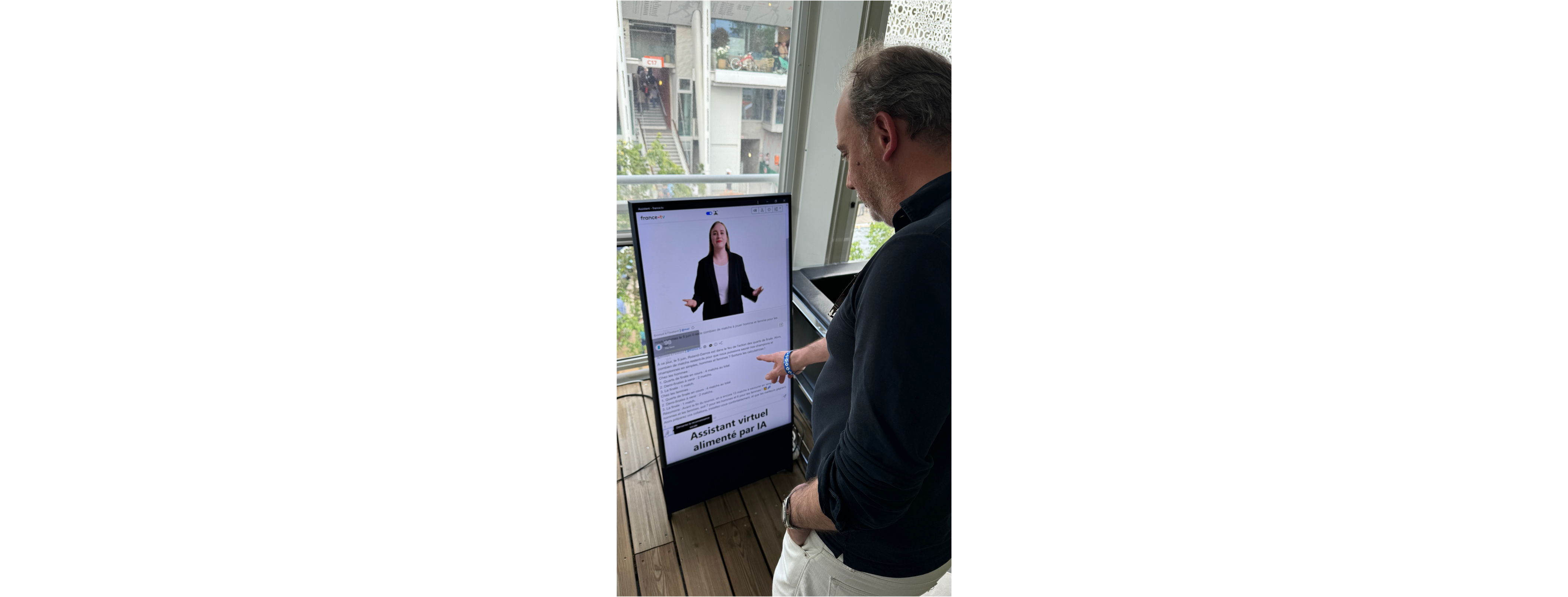

In the pursuit of research on artificial intelligence and avatars, the innovation department has developed a conversational agent in the form of a realistic video avatar, allowing it to answer questions about tennis and tournament news. This conversational agent is connected to various market LLMs and uses data from reference sites (franceinfo, FFT...).

How does it work?

Different technological components have been combined to create this video chatbot, which allows users to put questions to a realistic avatar and interact with it.

A first “speech to text” component uses Whisper ASR (OpenAI) to transcribe the user’s oral request into text that will feed the prompt sent to the selected LLM.

The demonstrator allows the selection of different market LLMs (ChatGPT, Gemini, Mistral, LLaMa, Claude, Palm2…) in order to compare their responses, in particular their relevance and speed.

To improve the quality of responses and the freshness of data, a RAG (Retrieval-Augmented Generation) mechanism has been implemented within the demonstrator. Its vector database allows the prompt to be enriched with recent and verified data from selected reference sites, enhancing the reliability of the LLM's responses. Depending on the user's request, the agent can also query the FFT results database in real time to supplement its responses.
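The retrieval step can be sketched in a few lines. This is a toy illustration under stated assumptions, not the demonstrator's implementation: word counts stand in for the learned embeddings a real vector database would store, and the function names and prompt wording are hypothetical.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: a bag-of-words count vector (assumption, not a real model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, documents, k=2):
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=2):
    """Enrich the prompt with the retrieved passages before calling the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {question}"
```

The LLM then receives the output of `build_prompt` instead of the bare question, which is what lets it draw on recent, verified passages.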

The response formatted by the LLM according to the chosen criteria (response length, tone, language, etc.) is then sent to the HEYGEN platform, which converts the text response into speech and generates a photorealistic avatar video that is broadcast live to the user (via WebRTC).
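The chain of components described above can be summarized as one function per conversational turn. This is a hedged sketch of the orchestration only: each stage is passed in as a plain callable, so nothing here reflects the actual Whisper, LLM, or HEYGEN APIs, and every name is hypothetical.

```python
from typing import Callable, Dict

def answer_turn(
    audio: bytes,
    transcribe: Callable[[bytes], str],        # speech-to-text stage
    retrieve_context: Callable[[str], str],    # RAG lookup stage
    llms: Dict[str, Callable[[str], str]],     # selectable LLM backends
    llm_name: str,
    render_avatar: Callable[[str], str],       # text-to-avatar-video stage
) -> str:
    """Run one question through the pipeline and return the avatar output."""
    question = transcribe(audio)
    context = retrieve_context(question)
    prompt = f"Context:\n{context}\n\nQuestion: {question}"
    reply = llms[llm_name](prompt)  # the chosen LLM formats the answer
    return render_avatar(reply)
```

Keeping the LLMs in a dictionary mirrors the demonstrator's ability to switch models and compare their answers without touching the other stages.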

Studying such a system allows us to explore various issues raised by generative artificial intelligence technologies (costs, reliability, ethics, hallucination...) before considering the deployment of a conversational agent within those digital services of France Télévisions that express the need for one.

About Leia Inc:

Leia Inc. offers the only technology providing immersive experiences to consumers on any device today. With its integrated platform of hardware, software and AI working together, LeiaSR 2D-to-3D switchable displays offer unparalleled immersion, while its Immersity AI web application, built on Neural Depth technology, turns content into immersive content by converting images and videos into 3D.

About the CEA:

CEA-List is one of the three institutes under the Directorate of Technological Research at CEA. Experts in the field of intelligent digital systems, CEA-List supports the competitiveness of companies through technology transfer and innovation aimed at the industrial sector.
