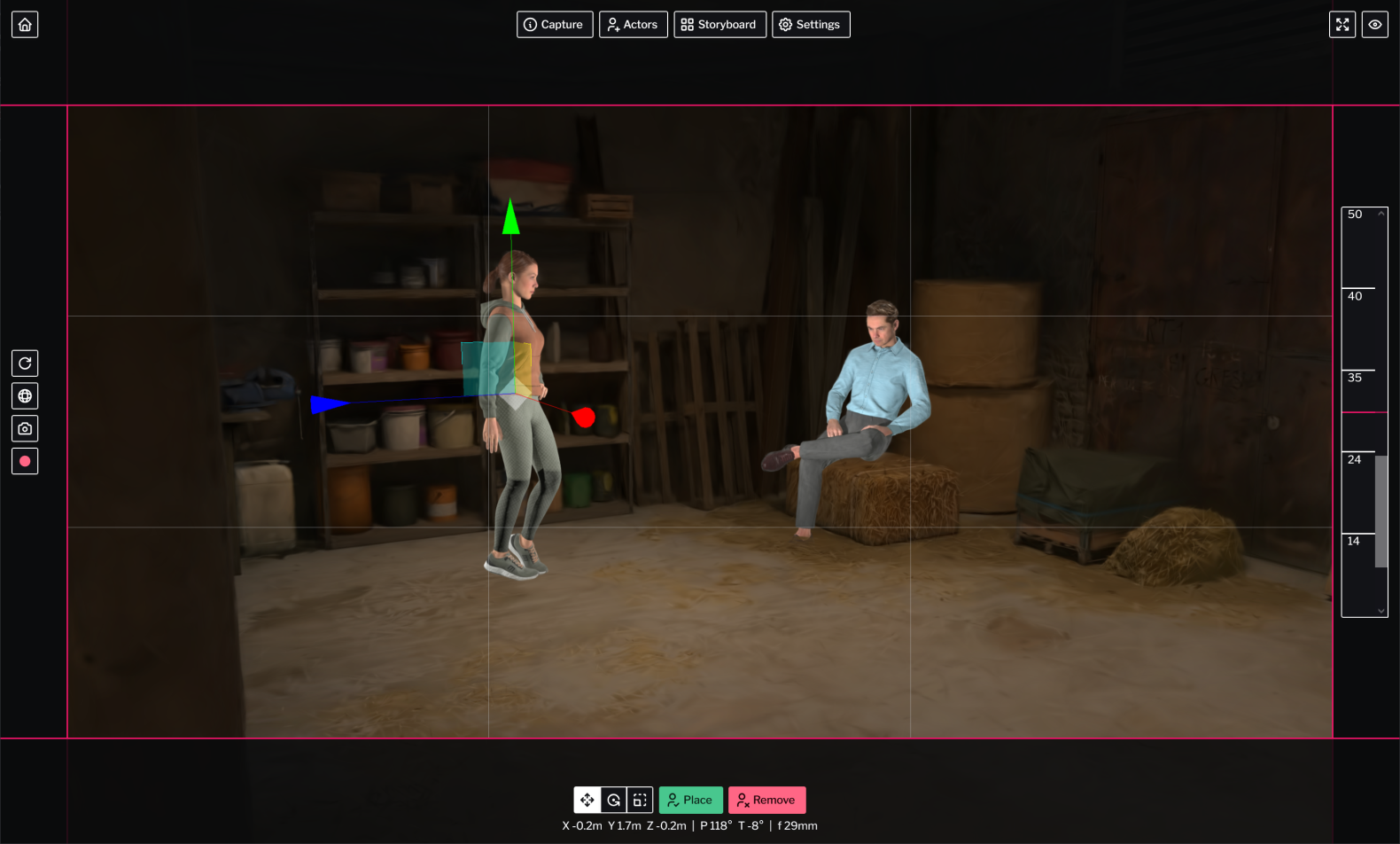

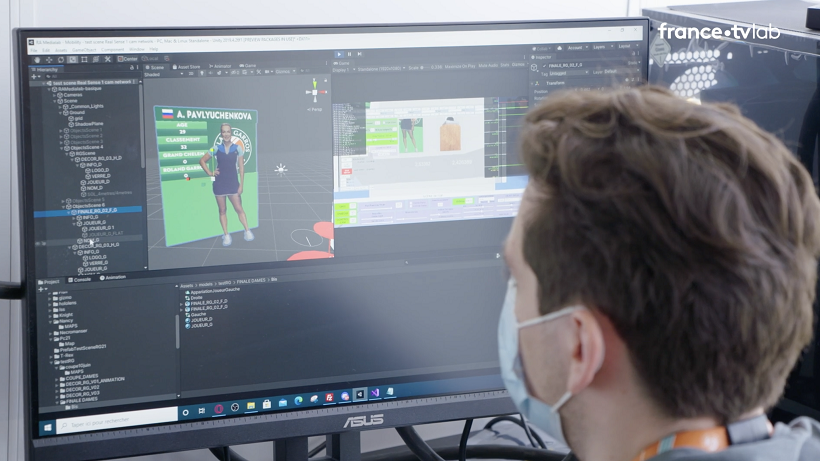

For this 2021 edition, still marked by Covid-19 health restrictions, we were unable to bring the planned partners on board and had to consider alternatives. Together with the editorial teams of the France Télévisions Sports Department, we therefore decided to explore the use of Augmented Reality (AR) for the Roland Garros broadcast. This work led to the production of two AR sequences presenting the women's and men's finalists on outdoor sets. The 3D models were produced by “La Fabrique” through the Nancy videographic workshop. To produce these sequences, we upgraded our own “JP Tech” AR solution so that it could operate on the move in the aisles of Roland Garros.

JP Tech outdoor, on-the-move Augmented Reality (AR) lets you shoot AR video footage outside TV studios, without any heavy infrastructure, using only lightweight, wireless and low-cost equipment!

Conditions that encourage innovation

Today, broadcast AR systems are confined to television studios or, when used outdoors, require significant logistics and budget, along with many technical constraints such as access to mains power.

After experimenting with low-cost outdoor AR, we decided to extend the solution to make it mobile. Our previous outdoor AR setup relied on equipment that had to be plugged into mains power. In particular, the tracking system, based on Valve's Lighthouse technology, required Lighthouse base stations powered from 220 V outlets, and the HTC Vive headset had to be connected both to power and to the PC running the AR software. To achieve mobility, we had to find alternatives that use only battery-powered devices and transmit tracking, video and sound wirelessly. This gives the film crew more freedom of movement and a wider choice of locations for AR footage.

Knowing how to bounce back and adapt quickly, the hallmark of innovation


The tracking system, the core of AR, is the crucial element here, because all the other links in the chain have been around for quite some time and are well known and mastered. However, a low-cost, wireless, turnkey AR tracking system that works outdoors without infrastructure and is reliable enough for broadcast does not exist today. We therefore had to find a system that met these criteria.

Initially we chose the Intel RealSense T265 camera, which offers 6DOF spatial tracking (6 degrees of freedom: the device's position along 3 axes and its rotation about 3 axes are known at all times) both indoors and outdoors. It is a compact device that connects via USB. The camera is supported by many software packages, in particular Unity, the real-time 3D engine on which our solution is built.

Tests showed good results indoors or in low light, but much poorer results outdoors in broad daylight. Yet tracking reliability is essential: the slightest aberration or anomaly in the position reported by the RealSense makes the 3D images move inconsistently with the real images, instantly ruining the final result. We therefore had to find a tracking system that was more efficient and reliable outdoors. After some development and testing, we chose the Oculus Quest VR (Virtual Reality) headset. It meets all the criteria: it is inexpensive, self-contained and wireless, it has Wi-Fi, and above all it has built-in, precise and reliable inside-out spatial tracking that also works in broad daylight.

We retrieve real-time tracking data from the Oculus Quest and transmit it to our AR PC through a modem connected to a 4G VPN provided by Orange. This VPN guarantees bandwidth regardless of the number of 4G users in the area, so that the data arrives without loss and with low latency. The Oculus Quest sends its position and orientation over the network 72 times per second, with millimeter precision! Once the tracking data has been received, we can generate the 3D images from the same angle as the real video camera.
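To give a sense of the data volume involved, here is a minimal Python sketch of how one 6DOF tracking sample (a timestamp, a position and an orientation quaternion) could be serialized for transmission. The binary layout `POSE_FORMAT` and the functions `pack_pose`, `unpack_pose` and `bytes_per_second` are hypothetical illustrations, not the format actually used between the Quest and the AR PC.

```python
import struct

# Hypothetical wire layout (an assumption, not the JP Tech protocol):
# little-endian float64 timestamp, then position x, y, z in meters (float32),
# then orientation as a quaternion x, y, z, w (float32).
POSE_FORMAT = "<d3f4f"
POSE_SIZE = struct.calcsize(POSE_FORMAT)  # 8 + 3*4 + 4*4 = 36 bytes

TRACKING_RATE_HZ = 72  # update rate quoted for the Oculus Quest


def pack_pose(timestamp, position, rotation):
    """Serialize one 6DOF sample into a fixed-size binary payload."""
    return struct.pack(POSE_FORMAT, timestamp, *position, *rotation)


def unpack_pose(payload):
    """Inverse of pack_pose: returns (timestamp, position, rotation) tuples."""
    values = struct.unpack(POSE_FORMAT, payload)
    return values[0], values[1:4], values[4:8]


def bytes_per_second(rate_hz=TRACKING_RATE_HZ):
    """Raw payload bandwidth, ignoring UDP/IP and VPN overhead."""
    return POSE_SIZE * rate_hz


if __name__ == "__main__":
    sample = pack_pose(12.5, (1.0, 1.6, -0.25), (0.0, 0.0, 0.0, 1.0))
    print(len(sample), unpack_pose(sample))
    print(bytes_per_second(), "bytes/s")
```

At 72 Hz this layout amounts to about 2.6 kB/s of payload, tiny compared with the video stream, and float32 still resolves positions well below a millimeter over a few tens of meters.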

However, the tracking data and the video are not synchronized when they reach the computer, so we performed this synchronization step manually.

Because it is transmitted wirelessly over IP, the video generally arrives with a delay, particularly relative to the tracking data, which is transmitted much faster. To address this, our AR software can add a delay to the tracking data before using it to generate the 3D images. This delay must, however, be determined manually by observing the result: we compare the movements of the video camera with those of the 3D objects. If the 3D objects move ahead of the video, we increase the delay; if they lag behind, we reduce it, and we repeat until the two movements are perfectly synchronized.
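The manual delay adjustment can be pictured as a small buffer of timestamped tracking samples: the renderer asks for the pose that arrived `delay_s` seconds ago, and the operator nudges `delay_s` up or down while watching the composite. The class below is a minimal sketch under that assumption; `DelayedTracking` and its methods are hypothetical names, not part of the JP Tech software.

```python
from bisect import bisect_left, bisect_right


class DelayedTracking:
    """Hypothetical buffer that replays tracking samples `delay_s` seconds late."""

    def __init__(self, delay_s=0.0, history_s=2.0):
        self.delay_s = delay_s      # manually tuned tracking delay, in seconds
        self.history_s = history_s  # how much tracking history to keep, in seconds
        self._times = []            # arrival times, in increasing order
        self._poses = []            # pose received at the matching arrival time

    def push(self, arrival_time, pose):
        """Store a new sample and drop samples older than the history window."""
        self._times.append(arrival_time)
        self._poses.append(pose)
        cutoff = bisect_left(self._times, arrival_time - self.history_s)
        del self._times[:cutoff]
        del self._poses[:cutoff]

    def adjust(self, step_s):
        """Operator control: positive step if the 3D objects move ahead of the
        video, negative if they lag behind. The delay never goes below zero."""
        self.delay_s = max(0.0, self.delay_s + step_s)

    def pose_for_render(self, now):
        """Return the most recent sample received at or before `now - delay_s`."""
        if not self._times:
            return None
        i = bisect_right(self._times, now - self.delay_s) - 1
        # If the delay reaches back past the buffer, fall back to the oldest sample.
        return self._poses[max(i, 0)]


if __name__ == "__main__":
    tracker = DelayedTracking(delay_s=0.5)
    for k in range(10):
        tracker.push(k * 0.25, f"pose-{k}")
    print(tracker.pose_for_render(2.0))  # pose-6
```

With samples arriving every 0.25 s and a 0.5 s delay, a frame rendered at t = 2.0 s uses the sample received at t = 1.5 s. Taking the nearest earlier sample rather than interpolating keeps the sketch short; at 72 Hz consecutive samples are less than 14 ms apart.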

The other difficulty is producing a 3D image with the same perspective as the real video camera. This raises two underlying issues:

- The tracking system reports its own precise position; although it is rigidly attached to the camera, there is an offset (delta) between the position of the camera and that of the tracking system.

- The camera and its lens produce an image with a specific field of view.

To solve the offset problem: since the headset is attached to the camera by a fixed clip, the offset is constant, so we can measure it and apply it in our calculations. We physically measure the distance between the headset and the camera (height, length, width) and enter these values into our AR software. Another point of attention: the orientation of the headset may differ from that of the camera, so the software includes a procedure to correct it.
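Applying the measured offset amounts to composing two rigid transforms: the camera pose is the headset pose followed by the fixed headset-to-camera transform. The sketch below shows this with quaternions in plain Python, assuming the offset is expressed in the headset's own frame; the function names and the (w, x, y, z) quaternion convention are illustrative choices, not the AR software's actual code.

```python
import math

# Quaternions are (w, x, y, z) tuples using the Hamilton convention.


def quat_mul(a, b):
    """Hamilton product a * b (applies rotation b first, then rotation a)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_rotate(q, v):
    """Rotate 3D vector v by unit quaternion q: q * (0, v) * conj(q)."""
    w, x, y, z = q
    r = quat_mul(quat_mul(q, (0.0, *v)), (w, -x, -y, -z))
    return r[1:]


def quat_from_axis_angle(axis, angle_rad):
    """Unit quaternion for a rotation of angle_rad about the given axis."""
    ax, ay, az = axis
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    s = math.sin(angle_rad / 2) / norm
    return (math.cos(angle_rad / 2), ax * s, ay * s, az * s)


def camera_pose(headset_pos, headset_rot, offset_pos, offset_rot):
    """Camera pose in world space from the tracked headset pose.

    offset_pos / offset_rot are hypothetical calibration values describing the
    camera relative to the headset, in the headset's own frame (the measured
    height/length/width and the orientation correction).
    """
    shift = quat_rotate(headset_rot, offset_pos)  # the offset turns with the headset
    cam_pos = tuple(h + s for h, s in zip(headset_pos, shift))
    cam_rot = quat_mul(headset_rot, offset_rot)   # correction expressed in headset frame
    return cam_pos, cam_rot


if __name__ == "__main__":
    # Headset turned 90 degrees about the y (vertical) axis, camera 10 cm below
    # and 20 cm in front of it along the headset's z axis (made-up numbers).
    q = quat_from_axis_angle((0.0, 1.0, 0.0), math.pi / 2)
    pos, rot = camera_pose((1.0, 0.0, 0.0), q, (0.0, -0.1, 0.2), (1.0, 0.0, 0.0, 0.0))
    print([round(c, 3) for c in pos])
```

In this example, assuming a y-up frame, the 20 cm forward offset ends up along the world x axis once the headset has turned, which is why the offset must be rotated with the headset rather than simply added to its position.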

For the field of view, we set up a procedure that compares the size of a real-world object with that of its 3D equivalent, then we adjust the parameters until the sizes match.
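Under a simple pinhole-camera model with no lens distortion (an assumption; real broadcast lenses add some distortion), the on-screen width of an object is inversely proportional to tan(θ/2), where θ is the horizontal field of view. If the 3D equivalent is placed at the same distance as the real object, comparing their on-screen widths gives a one-step correction: tan(θ_new/2) = tan(θ_current/2) × virtual_width / real_width. The hypothetical helpers below only illustrate this relationship; the procedure described above adjusts the parameters by hand.

```python
import math


def apparent_width_px(object_width_m, distance_m, hfov_deg, image_width_px):
    """Pinhole model: on-screen width of an object facing the camera."""
    half = math.radians(hfov_deg) / 2
    return image_width_px * object_width_m / (2 * distance_m * math.tan(half))


def corrected_hfov(current_hfov_deg, virtual_width_px, real_width_px):
    """Horizontal FOV that makes the virtual object match the real one.

    If the 3D object looks larger than the real one, the virtual camera's FOV is
    too narrow, so tan(fov/2) is scaled up by virtual/real (and vice versa).
    """
    half = math.radians(current_hfov_deg) / 2
    new_half = math.atan(math.tan(half) * virtual_width_px / real_width_px)
    return math.degrees(2 * new_half)


if __name__ == "__main__":
    # Made-up example: true lens FOV 50 degrees, virtual camera wrongly set to 60.
    real = apparent_width_px(1.0, 5.0, 50.0, 1920)
    virtual = apparent_width_px(1.0, 5.0, 60.0, 1920)
    print(round(corrected_hfov(60.0, virtual, real), 3))
```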

By following our calibration procedure, which is specific to each camera, we can generate 3D images from the same perspective as the real video camera.

Our system has one constraint: the camera's focal length must remain fixed, because we do not retrieve focal-length changes from the camera. The zoom therefore cannot be used; to simulate a zoom, the camera operator must physically move forward or backward.
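The zoom must stay fixed because the calibrated field of view is tied to the focal length. Under the pinhole model, the horizontal field of view is 2·atan(w / 2f) for a sensor of width w and a focal length f, so any zoom change invalidates the calibration unless the new focal length is passed to the renderer. The sketch below illustrates this; the 8.8 mm sensor width is an assumed value (a typical 2/3-inch broadcast sensor), not a figure from the production.

```python
import math

SENSOR_WIDTH_MM = 8.8  # assumed: typical width of a 2/3-inch broadcast sensor


def horizontal_fov_deg(focal_length_mm, sensor_width_mm=SENSOR_WIDTH_MM):
    """Pinhole-model horizontal field of view for a given focal length."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


if __name__ == "__main__":
    for f in (8.0, 16.0, 32.0):
        print(f"{f:>5.1f} mm -> {horizontal_fov_deg(f):5.1f} deg")
```

Doubling the focal length roughly halves the field of view, so a 3D scene calibrated at one focal length would be drawn at the wrong scale as soon as the operator zoomed; moving the camera physically keeps the lens geometry, and therefore the calibration, unchanged.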

Mobility makes it possible to add AR to broadcast footage shot in locations that were, until now, inaccessible to conventional AR production. Our system also has the potential to operate on a larger scale! This technology should eventually enable AR sequences in which the camera operator moves about twenty meters, opening up larger-scale scriptwriting possibilities.

Stay tuned!

